Hello world,

Recently, I had a mission to analyze the Launch implementation of a client. The main concern was, as usual, that the website team complained that Launch uses a lot of resources and blocks the rendering of the page. They also felt that the Launch library was quite large and should be optimized.

As I work(ed) for this client for a long time, I knew that some of these concerns were well-placed. Some of the problems have good reasons to stay as they are, and some do not.

I took the opportunity of this review to show you how I handled the analysis. It is also an opportunity to recap what an optimized version of Launch is, how Launch actually behaves, and so on…

Basically, I am showing what I do as a consultant when working on a Launch topic.

When you develop expertise in a domain, you start to realize that it is possible to optimize and approach the implementation by focusing mostly on client needs and use cases rather than on the technology.

If you are familiar with this meme, I created a new version for you:

In my case, I will focus on Launch and the list of chapters will be the following:

- Launch setup & capability

- Async, defer and implication

- Launch Architecture

- Launch runTime behavior

- Summary

Launch setup & capability

The first thing you want to do when you are analyzing a tag container is to look at how much weight the library represents on the client website.

There are different ways to present that information:

| Element | Size |
| --- | --- |
| Website loaded library (compressed and minified) | 260 Kb |
| Full script (minified) | 1 340 Kb |
| Full script (unminified) | 2 620 Kb |

We can already see that a lot of optimization is done through the minification of the code and the compression of the file during data transfer.

Also, Launch has several other methods to optimize code weight that we are going to discuss later.

It is important to get both the minified and unminified sizes, as we will use them later in the analysis.

Another important setup to verify is whether you are using an async implementation. For performance optimization, the asynchronous method is always preferred. It is the default implementation proposed by Launch.

Nevertheless, there are moments when you cannot use it. Also, many people mix up the async and defer setups.

This is the perfect time for me to explore these concepts a bit more.

Async, Defer and implication

Async tells the browser to carry on with its own work while the resource is being fetched.

The thing is that the resource you are requesting (in the case of a TMS, a JS file) usually requires a bunch of things to be resolved (domain resolution, connecting to the server,…) before it can be downloaded and executed.

Async allows the browser to do all of this in the background, without blocking the parsing of the page. The script is then executed as soon as its download is complete.

Defer also lets the browser fetch the resource in the background, but tells it that executing this resource is not a priority: the execution is postponed until the browser has finished parsing the document, and deferred scripts are executed in the order in which they appear in the page.

Using a deferred or asynchronous implementation has some implications. A major one is that you are never sure exactly when the code is executed and which resources will have been loaded when your code executes.

In the case of my client, they didn’t use async (or defer, for that matter), and moving the loading to asynchronous would have helped performance. However, as we are talking about async and not defer, the gain is still limited to the connection time, so do not expect miracles when it comes to that.
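To check which loading mode a page actually uses for Launch, a small script can inspect the embed tag. The following is only a minimal sketch using the Python standard library: the sample page and the `launch-EXAMPLE.min.js` file name are made up for illustration, and the check assumes the library is served from `assets.adobedtm.com`, which will not be the case if you self-host it.

```python
from html.parser import HTMLParser


class ScriptTagCollector(HTMLParser):
    """Collect the loading mode of every external <script> tag."""

    def __init__(self):
        super().__init__()
        self.scripts = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        # Boolean attributes such as async/defer are reported with value None.
        attributes = dict(attrs)
        src = attributes.get("src")
        if src is None:
            return  # inline script: async/defer do not apply
        if "async" in attributes:
            mode = "async"
        elif "defer" in attributes:
            mode = "defer"
        else:
            mode = "blocking"
        self.scripts.append((src, mode))


def launch_loading_modes(html, host="assets.adobedtm.com"):
    """Return (src, mode) for every script served from the given host."""
    parser = ScriptTagCollector()
    parser.feed(html)
    return [(src, mode) for src, mode in parser.scripts if host in src]


# Hypothetical page: the Launch embed code has neither async nor defer.
SAMPLE_PAGE = """
<html><head>
<script src="//assets.adobedtm.com/launch-EXAMPLE.min.js"></script>
<script src="/static/app.js" defer></script>
</head><body></body></html>
"""

for src, mode in launch_loading_modes(SAMPLE_PAGE):
    print(f"{src}: {mode}")
```

Running this on the HTML of each market's home page gives a quick overview of where the asynchronous embed code is still missing.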

Launch Architecture

Launch has a certain “preferred” architecture, a way that it is supposed to work.

You normally have rules that are containers of components and that decide what is going to be triggered. A rule is composed of 3 things:

- Event (your trigger)

- Condition

- Action (what is being triggered)

The event part reacts to … an event.

The condition part runs during the runtime of the code, checks the logic, and prevents or allows the rule to execute.

The action part is the actual code that normally gets executed as JavaScript. You can run pure JavaScript or decide to execute actions predefined for you by some extensions.

Extensions are JavaScript libraries that can simplify implementation. There are several extensions, and the most used ones are probably the Adobe Analytics and Experience Cloud ID Service extensions, which wrap AppMeasurement and the Visitor ID service.

You can have multiple events, conditions and actions in one rule, relating to different extensions.

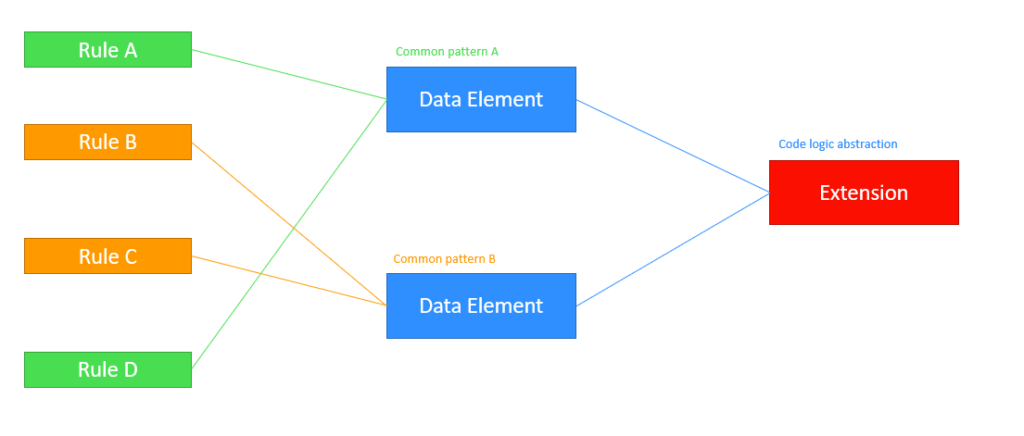

Pretty often, you have code that gets repeated across multiple rules, or conditions that always check a specific value. You can combine that into what is called a Data Element. It is a piece of code that centralizes your variables and that you can call within any component or extension.

Normally the preferred architecture is to have this layer of abstraction:

You identify common patterns or values used in different rules and create a single data element. If the code logic used to build that data element is always the same, you try to abstract the logic in an extension.

In the end, the goal is to follow the DRY principle (Don’t Repeat Yourself).

Theory & application

As you try to reduce code duplication and avoid repeating components, you understand pretty easily that the ideal scenario is to create as few rules and as few data elements as possible.

This is idealistic. For a large organization, spread across several markets, that needs to keep an overview, this minimal configuration brings some challenges. One of the most important ones is the ability to quickly identify and act on market-specific configuration.

If you were to create one rule for all 3rd party tags, for example, it would be impossible to understand which markets are concerned by a given 3rd party tag, except by going into the rule and looking at the code.

If you had one rule per provider, you would not know which market is using which provider, except by going inside the rule itself again.

All of these considerations are not technical considerations but governance processes that need to be taken into account.

You could argue that we can split the Launch property (the higher-level container that contains rules, data elements and extensions) into different properties per market. That would ensure that each property has a minimum amount of code, but it would result in duplication of common code… which is not ideal for maintenance. The cost of creating duplication is pretty low, and you can even automate that part if you are capable enough… the maintenance cost, however, is pretty high.

Therefore, in a single property, several markets that have different configurations and legal consent statuses needed to co-exist for the sake of standardizing the implementation (mainly for Analytics).

This kind of setup is pretty common in the TMS world and there is no magic button that I know of to solve that. There are tradeoffs, and clients need to decide on the solution they are the most comfortable with. In my case, it was one rule per provider (Facebook, Google, etc…) and per market.

Using the Launch API, I ended up with the following information for my property:

| Elements | Data |
| --- | --- |
| Rules | 1 263 |
| Rules Components | 4 633 |
| Data Element | 119 |

But most importantly:

| Elements | Data |
| --- | --- |
| Rules Size | 1 057 Kb |
| Rules Real Size | 4 105 Kb |
| Data Element Size | 346 Kb |

You can see that I was able to calculate the size that the rules and data elements were using.

Here it is important to know a few things:

- Using the API will allow you to get the code of your rules unminified and uncompressed.

- Extension code can hardly be optimized because it has been coded for you by the extension developer.

- Data Elements code is always loaded in the main Launch JS library.

- Not all of the rules will have their action code included in the main Launch JavaScript library (we’ll discuss that in the next section)

- We can differentiate the Rule Size (that is loaded in the main library) and the Rule Real Size (that is the whole code of the rule)

Naming convention & Analysis

Here comes the important part of my analysis, and it relies on an important aspect that is not technical:

The rule naming convention

Many things have been said about this, but we clearly need to understand that this aspect is one of the most important elements of your Tag Management System, for maintenance reasons but also for analysis and ease of use.

This part is not technical and requires a good understanding of how you want to work and monitor your TMS implementation.

Jim Gordon came up with a good idea of a naming convention for Launch: https://jimalytics.com/tag-management/adobe-launch-naming-conventions/ You should check it out.

We decided our naming convention before the article came out, but it was “strangely” very similar anyway. In our case, we had several scopes.

The Market, of course, but also the page of the website or the event.

This allows me to perform a more advanced analysis of the property, such as:

- Top Market distribution

  - Top 10 markets represent 62% of the size

  - Global rules (in all markets) represent 35% of the code size. We will see that this is mainly related to the Adobe Analytics implementation.

- Top Provider distribution

  - Adwords had 185 occurrences in the implementation, Adform 170

  - Average size of a provider is around 500 bytes

  - Adobe rules have an average size of 7 Kb, though

- But you can also see it for market distribution:

  - Adwords (again) was present in 37 markets, but Adform only in 26

- Finally, the most important distribution would be by rule type:

  - Direct Call Rules represented only 33 rules

  - Window Loaded event represented 1 177 rules

All of this information gives us a clear indication of how the property has been set up.
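To give an idea of how such distributions can be derived from rule names, here is a minimal sketch. The `MARKET | Provider | Event` pattern and the sample names are invented for this illustration; they are not the client's actual convention, so the parsing function needs to be adapted to whatever scopes your own convention encodes.

```python
from collections import Counter, defaultdict


def parse_rule_name(name):
    """Split a rule name following the hypothetical 'MARKET | Provider | Event' pattern."""
    market, provider, event = (part.strip() for part in name.split("|"))
    return {"market": market, "provider": provider, "event": event}


# Invented sample; in practice the names would come from the rules of your property.
rule_names = [
    "FR | Adwords | Window Loaded",
    "DE | Adwords | Window Loaded",
    "DE | Adform | Window Loaded",
    "GLOBAL | Adobe Analytics | Direct Call",
]

parsed = [parse_rule_name(name) for name in rule_names]

# Number of rules per provider and per event type.
provider_occurrences = Counter(rule["provider"] for rule in parsed)
event_distribution = Counter(rule["event"] for rule in parsed)

# Set of markets in which each provider is present.
markets_per_provider = defaultdict(set)
for rule in parsed:
    markets_per_provider[rule["provider"]].add(rule["market"])

print(provider_occurrences.most_common())
print(event_distribution.most_common())
print({provider: len(markets) for provider, markets in markets_per_provider.items()})
```

The point is less the code than the dependency: without a consistent naming convention, none of these groupings can be computed without opening every rule.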

Here we can use the information we got from our initial size estimation to estimate the impact of the rules on the library load size.

If you remember, we found that the library size is 2 620 Kb unminified, 1 340 Kb minified and 260 Kb compressed.

We can apply these ratios to get an estimate, as the Launch API provides the unminified version of your code.
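As a rough worked example, the ratios can be applied to the sizes retrieved from the API. This sketch assumes that rules and data elements minify and compress in the same proportion as the library as a whole, which is only an approximation; the function name is mine.

```python
# Sizes of the full library in Kb, taken from the first table of this article.
LIBRARY_UNMINIFIED_KB = 2620
LIBRARY_MINIFIED_KB = 1340
LIBRARY_COMPRESSED_KB = 260

# Assumption: every part of the library shrinks by the same ratios.
MINIFIED_RATIO = LIBRARY_MINIFIED_KB / LIBRARY_UNMINIFIED_KB
COMPRESSED_RATIO = LIBRARY_COMPRESSED_KB / LIBRARY_UNMINIFIED_KB


def estimate_loaded_size(unminified_kb):
    """Estimate minified and compressed sizes (in Kb) from an unminified size."""
    return {
        "unminified": unminified_kb,
        "minified": round(unminified_kb * MINIFIED_RATIO),
        "compressed": round(unminified_kb * COMPRESSED_RATIO),
    }


# Unminified sizes computed from the Launch API (second size table above).
api_sizes_kb = {"Rules Size": 1057, "Data Element Size": 346}

for label, size_kb in api_sizes_kb.items():
    print(label, estimate_loaded_size(size_kb))
```

With these numbers, the rules would account for roughly 541 Kb minified and 105 Kb compressed, and the data elements for roughly 177 Kb minified and 34 Kb compressed.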

Following that information, I do not believe that there is a clear path forward.

What is clear is the origin of the Launch library size and the possible paths for optimization, but in the end, there are other considerations around maintenance and governance that need to be taken into account.

Also, we are currently missing an important piece of understanding for the analysis to be complete: understanding how that translates to the Launch runtime behavior.

Launch runTime Behavior

As we described before, Launch has some capabilities to optimize its size, and it also has some capabilities for optimization at runtime.

There is a good article on Medium about what is considered for inclusion in the main Launch library.

https://medium.com/adobetech/build-optimization-through-minimal-inclusion-87ea637e174f

This will give you details on the behavior that I will explain below.

When you load Launch on your website, the main library is called for download. We already explained what the async attribute does and how it can help with website performance.

Once the download is started, the following sequence happens in your Launch script:

- Extensions are loaded

- Data Elements are loaded

- Extension configurations are executed

- Rules are loaded

- Rules that have “library loaded” as the trigger are executed

When you look at the code contained in that container specifically, you can see that the whole code of the Extensions and the Data Elements is loaded.

It makes sense because Extensions need to execute directly after load, so downloading them at the same time as the library is needed. Data Elements can be referenced in the Extensions, so they also require the code to be available as fast as possible.

For the rules, you can see that there are rules that can be triggered when the Launch library is loaded. That creates 2 different behaviors for the rules, and especially for the rule actions:

- If the rule is to be executed at the library-loaded event, the whole code is downloaded

- If the rule does not need to execute at the library-loaded event, then the action can be extracted into a separate container that will be called upon request.

The implication is that by setting your rule to be fired/executed at the DOM Ready or Window Loaded event, the action code is loaded asynchronously and executed afterwards.

That is a clever optimization that reduces the size of the main library.

One small detail, though: this optimization behavior is only available for the custom code action of the Core extension.

If you are using Adobe Analytics Extension and the custom code inside that action, the code is loaded anyway.

There is no extension, except the Core extension, that provides this type of optimization, to my knowledge.

Therefore, in my analysis, you can see that I separated Size and Real Size.

- Size represents the size that is supposed to be loaded within the main Launch library

- Real Size represents the complete size that will be loaded on the website, once the rule is fired.

It means that for “library-loaded” event rules, the size and the real size of the action will be similar, but not for the other rules. In the script analyzing the code of your property, you need to account for that… except if the code is coming from the Adobe Analytics extension (or any other extension that is not the Core extension).
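The distinction between Size and Real Size can be sketched as follows. This is not the script I used, only an illustration of the logic described above: the `Rule` and `Action` structures, the extension labels and the event names are hypothetical, and only action code is counted (events and conditions are ignored for simplicity).

```python
from dataclasses import dataclass

LIBRARY_LOADED = "library-loaded"


@dataclass
class Action:
    extension: str        # hypothetical labels, e.g. "core" or "adobe-analytics"
    is_custom_code: bool  # True when the action carries custom code
    code: str


@dataclass
class Rule:
    name: str
    events: list
    actions: list


def code_size(code):
    """Size of the code in bytes."""
    return len(code.encode("utf-8"))


def is_loaded_later(rule, action):
    """True when the action code is kept out of the main library."""
    return (
        action.extension == "core"
        and action.is_custom_code
        and LIBRARY_LOADED not in rule.events
    )


def rule_sizes(rule):
    """Return (size in the main library, real size) for the actions of a rule."""
    real_size = sum(code_size(a.code) for a in rule.actions)
    size = sum(code_size(a.code) for a in rule.actions if not is_loaded_later(rule, a))
    return size, real_size


# Invented examples covering the three cases described in this section.
rules = [
    Rule("3rd party tag", ["window-loaded"], [Action("core", True, "x" * 500)]),
    Rule("Analytics", ["window-loaded"], [Action("adobe-analytics", True, "y" * 7000)]),
    Rule("Early setup", [LIBRARY_LOADED], [Action("core", True, "z" * 300)]),
]

for rule in rules:
    print(rule.name, rule_sizes(rule))
```

Only the first rule benefits from the optimization: its action adds nothing to the main library, while the Analytics custom code and the library-loaded rule count fully in both figures.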

Summary

Now that we have a clear understanding of Launch composition, Launch behavior and our property composition, we can plan some strategies focused on size reduction.

Unfortunately, this brings quite a basic set of recommendations, but now you can prove them rigorously, with data and facts.

From my analysis, I was able to identify the following points:

- Asynchronous capability needs to be set, if not already, in all markets, even though the improvement won’t be major.

- Adobe Analytics custom code was the main contributor to library weight for my client.

- 3rd party tag code was optimized by Launch to be loaded afterwards; however, the sheer number of tags counterbalances this optimization. Even with optimization, loading 100 Kb a thousand times is still huge.

- 3rd party tags are quite diverse and may require optimization with the Marketing team.

  Adwords, Doubleclick and Gtag (all Google tags) represent 43% of the 3rd party tags in place (506 tags for 40 markets). As gtag is supposed to replace most of the Google tags, there may be potential for optimization here.

- There were not a lot of library-loaded tags used when not required, but that could have been an opportunity. As described above, this event directly impacts library weight.

As explained previously, this is not always a technical discussion: your client (or yourself) needs to find the right balance between optimization and maintenance.

You can have a very optimized setup, but because the Tag Management System is something you need to modify quite frequently, you need to consider the cost of maintenance. The naming convention, which directly impacts the number of rules created, is a good example: this is a governance vs execution battle.

We explained that Launch has 2 possible layers of abstraction: data elements and extensions. This can help you optimize the implementation, but there are some pitfalls:

- Moving code from custom code to a data element is not beneficial if this code is not used multiple times in your property.

  - I personally feel it is even worse, as you lose the context of where this code is executed (which increases maintenance cost).

- Moving code contained in rules not triggered at the library-loaded event to a data element can be suboptimal.

  - As we have seen, Launch already optimizes the code contained in these rules by having a separate container loaded later for them.

- Building extension(s) that optimize the code and help you apply the DRY principle is the best strategy… if you have resources capable of building this kind of extension. The no-code strategy is a very code-intensive strategy at some point. Someone needs to write that abstraction layer.

One big aspect of my client, and of clients that have very large implementations in general, is that they rarely have internal resources handling the Tag Manager. It is a paradox, as they would be able to create a full-time position for that. They usually delegate it to agencies, and agency people come and go, so you need to account for the cost of entry to learn the Tag Manager as well.

Having a very optimized setup that only a few highly skilled people can touch also proves to be high-cost and pretty ineffective.

The analytics implementation issue

Finally, we have this Adobe Analytics implementation weight looming over us, which I kept for the end of the article. As you could see, this is THE major contributor to code weight.

Is Adobe Analytics that bad at code management?

Without trying to defend the Adobe tool because I am from Adobe, I understand that this code weight has a good reason for existing, and it didn’t appear overnight. Analytics tracking usually requires a lot of logic, not only for Adobe, as you need to provide a consistent setup and consistent data, independently of where the tag is located.

Most of the websites I know rely on a data layer to provide this information. However, what I often see is that the implementation of the data layer is viewed as a single project that lives independently of the website construction and does not really fall under the web analytics responsibility, but under a mix of web development and analytics specification.

That is bound to create some discrepancy over time between the data lying in the data layer and what should be tracked for the analytics tool. This was the case for the client, and because the budget decision between fixing this in the website implementation or in the tag manager favors the TMS (because the resource is cheaper), you end up with workarounds.

The workarounds are built with code, and small workarounds just pile up over time, creating a monster. The cost-effectiveness of the workaround doesn’t account for the maintenance cost it will create.

If you are unfortunate enough, you will even end up with workarounds of workarounds.

This is the main reason behind the weight of the website implementation: they live on a legacy Data Layer definition and, because of that, very few optimizations are possible.

The client’s partner was able to build an extension that abstracts most of the code logic required on almost every data layer call, but the meta logic (considering the page, switching between data layer elements) cannot be solved programmatically.

The only possible optimization there is to clean and improve the data layer. Surprisingly (or unsurprisingly), this is a very difficult budget to get accepted. The Data Layer optimization will make your implementation… work the same way (in one of the best-case scenarios). Sure, the workarounds will disappear and the performance of the website will improve… but it is hard to estimate by how much. The same goes for the maintenance cost, as this will create implementation opportunities that will eat budget. Many things that were not possible will be possible now, and you end up not saving very much on that front….

This opens a new discussion and an opportunity.

If you remember, the analysis started because the website team found that the TMS was having too much impact on the website load. Here you/I have an opportunity to tell the team:

- We do have a problem based on our Analytics implementation – we agree

- This problem exists because the Data Layer is not standardized, not up-to-date, etc…

- As the main requestor of Launch optimization, help us achieve it by upgrading the data layer implementation

Unfortunately, this kind of reasoning may be heard as boomerang blame… and you want to avoid that. If this happens, a team ego war will eat time and not solve the issue. So be careful.

I hope this article was helpful to you, even though I know it probably didn’t bring you major new information. This is a big recap of what I know about Launch, applied to a large property analysis.

I have to say that I didn’t acquire this knowledge overnight or through “an Adobe training”. It mostly comes from people sharing their experience and knowledge and doing their own tests (myself included 😀).

Thanks to (in no specific order), link to helpful resources attached: