In this blog post, I will try to gather all of the different knowledge topics that I think are useful to know when working on Web Analytics and are not so cover by regular blog posts on the topic. I will not go into details for each one because most deserve their own blog post. If you are interested in some topic in particular, let me know in the comment, I will consider it for a blog post later on.

It will be very a practical list and I tried to make it happen so you have a bit of knowledge on each of them after reading it, you would still need to work on it on your own if you wish to master them.

This is all of the things that I feel makes you a whole as (web) analyst, or at least working in the online world. I have been very lucky in my career as I was able to go from Marketing, to online Marketing, to (technical) SEO consultant, to web analyst, to testing manager, to data analyst and now doing a little bit of data engineering.

As the saying goes : “Jack of all trades, master of none…”

As a disclaimer, you don’t need to know everything on that list to be a digital analyst. I just happened to know all of that because I am curious and was very lucky.

When you start on that field (digital analytics), there are lots of books explaining you what is a page view, what is a bounce rate, how to look at data for users or unique visitors. Segmentation is often a topic as well as targeting but little they tell you about API or DSP…

If you are into digital analysis and are lucky enough to just look at the data, you will sooner rather than later hit the wall of misunderstanding because without the context of your data, there are assumption that you will take and they will be your downfall (or not). Therefore understanding your data and what other people are trying to realize is more important than knowing the formula of KI2-test by heart (sorry if you worked hard at school to achieve that and are reading this).

Marketing things

Publisher / Advertiser

What publishers and advertisers are is a very basic understanding in marketing but it can be quite abstract when you are outside this field. The advertiser is usually the one that wants to advertise on something and the publisher is the one having the will to display that on his medium.

In the online world, the advertiser is usually the one doing the creative and the publisher is where the ad is being display.

Advertisers are usually individual organization that compete against each other for a specific audience.

However, publishers can be quite different. There are obviously big websites with lots of traffic and an identified audience (GQ per example : mostly men with interest in fashion, sport, etc…)

In other hand, when you are a small website, it is very hard to find any advertiser that wants to make business with you. Therefore it exists grouping of website that gather small website to create volume of impressions that you can sell. (btw : the full number impressions that you can offer is often call the reach – as how many people you can reach)

These group of websites are called Ad Network, yeah, they are publishers but they have “Ad” in the name… I know very confusing… The most famous one is Ad Sense from Google.

On top of that, if you are interested to realize even more margin and allow advertiser to realize micro targeting on your profile, you can join an Ad Exchange.

There you can give advertiser the choice to use RTB on a DSP to acquire your identified user.

Confusing? Don’t worry we will go on that part next.

DSP / SSP / RTB

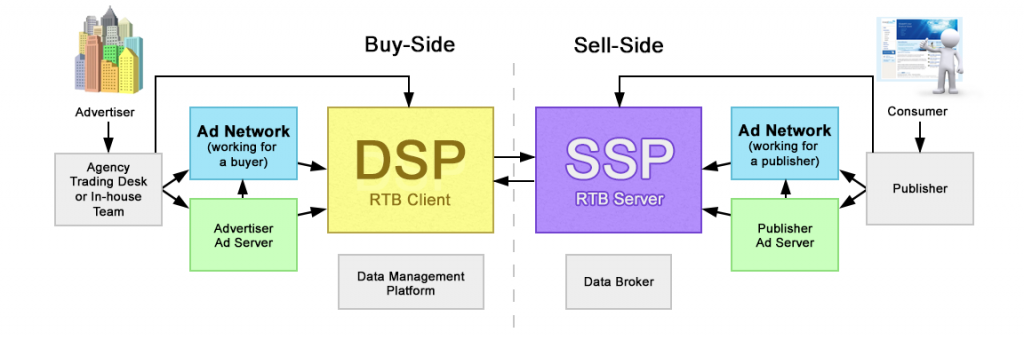

A DSP is a “Demand Side Platform” that allows the Advertisers to request and pay for impression, most likely in a RTB format. RTB stands for Real Time Bidding, so that advertisers are competing against each other on each impression.

“What to bid on?” you could ask and this is where cookie in place, the cookies that you get from your browsing experience create a profile that tells the advertiser if you are an interesting target (or not). How much he is willing to bid to acquire the display impression.

The most famous DSP platform is Adwords, except that you don’t compete for users but for keywords… How to optimize your campaign for this platform is a combination of statistics, ingenuity and magic…

SSP is the “Supply Side Platform” that is actually offering the different impressions the advertisers want to compete for. It actually is using a lot of technologies to makes everything quite fast so the user is barely noticing that this world is in competition for his attention. it is the other side of the mirror for the Publishers.

You have a nice introduction on those elements here.

CPC / CPM / CPL / CPA / eCPM

When you dig into the different strategy to realize online marketing, you may enter into the (buzz)world of cost models. I will go one by one and try to give you a bit of an explanation for them:

- CPM : Cost per mill : In the old world (but it still exists) this was the most common way to buy the inventory (the impressions) from a publisher. You usually pay per a thousand impression.

- CPC : Cost per click, advertisers started to realize that the impressions may not worth their price if no one is clicking on it. This realization

came very quicklytook some time but it is now more or less the standard and negociation around new deals always try to include a CPC price or a eCPM (which is based on Lead). - CPL & CPA : Cost per Lead or Acquisition is the cost you are paying for the acquisition of a new customer. It is quite hard to set a priori because of lack of data but this is common to compare how efficient are your channels. At some point, Publishers are even showing an eCPM which is based on that.

- eCPM : effective CPM, this is a calculation to define how much you are willing to pay for a thousand impression but you are still bound to a CPL model. It is usually define by the publisher based on how much revenue is being generated by the ad.

The formula goes as follow : (total earnings/total impressions) x 1,000.

To be able to measure that, you need to send information back to the Publisher and we will see that later.

Affiliation

Affiliation is a specific type of publisher that are showing ads and get usually commissions based on the sales they create. They are a bit different because they usually create specific content (complete websites) based on the product they are affiliating for.

Per example, you have a skateboard micro website that has lots of links to Vans. Usually there is a tracking parameter attached to these links and that helps defining which affiliation website is bringing Vans the order (or anything else). This micro site is getting paid a part of the sale back. It follows part of the CPL logic.

It is also a bit different because they can also use the other marketing methods to bring the user to their website and then to the affiliated website through their links. Depends how much you are spending on the traffic source but it can be quite powerful.

The affiliation websites that works the most are the one having coupon code to offer. This is creating a lots of qualified traffic but at the same it trims your benefit…

SEO / SEA

SEO and SEA are mostly, for Europeans, Google battleground. SEO stands for Search Engine Optimization and SEA for Search Engine Advertising. There is also a SEM for Search Engine Marketing, that regroups everything together but they are so different that it makes little sense to try to explain both in one word.

SEO technics are focusing on (great) content generation in order to answer to some queries but it also includes a lot of technical optimization. It can come to the website architecture, log analysis to see where Googlebot (crawler from google) is going and server configuration for caching. It is said to be the free channel and for being a SEO consultant for some years, I can tell you that this is not true.

SEA is the paid channel, you are competing for the keywords on that platform that is Google. I don’t know if you have ever heard of it but it is very popular so it can generate lots of traffic but you will soon discover that this is also popular for Advertiser and therefore it is quite expensive to depend on this.

Technical things

Now this part will be focused on more technical things that you should know. As explained earlier, I will not go into very much details. If you want to know more, you can either research on your own or ask questions on the comments. I will consider them for later posts. I will just bring you topics that I feel are interesting for you to know so you can have a better vision on how the whole web architecture is working.

Pixel Tracking / Request

To do a connection with the marketing explanation I was giving earlier, I will start by explaining quickly what a pixel tracking is, as it is related to marketing activities more often than not. The pixel allows you to send information back to other servers, and you can guess that publishers usually ask advertiser to set one of their thank-you page in order to calculate their eCPM (or any other metrics).

Why is it call a Pixel tracking, it is because you are usually requesting for an image through a GET method and set parameter information attached that define the information you are sharing to the partner. The image is a pixel of 1×1, invisible to the users.

Actually, analytics tools are (mostly) using the same technique but in a more dynamic way as they are often building a JS library and you can send, not pixels, but request.

However, the principle is the same and you are requesting some pixel (but sometimes you do POST stuffs) and that permit to send information to the analytics server that process this information.

Browser Server interaction

This is a very broad subject but you should be aware about the extend of what a front-end and back-end is. The browser is rendering information that has been passed by the server. From these information, some front-end logic is applied and is permitting your webpage to works properly (or not).

Common misunderstanding I see from non technical people is that they are confused by Java and JavaScript. This is a good example because Java is used in the Back-end environment, in other words, on the server.

JavaScript is used on the front-end of your page, to render the page so to say.

Servers can be very powerful machine that are running in the background and fetching information (using API – see below). They tend to be set in cluster where they are serving browser requesting information.

Browsers live in the user machine and have only a local copy of the information you are providing on your website. There are some optimization (caching) that optimize the amount of information the browser need to ask your server on each webpage. This is a very interesting topic but quite large to describe here.

The important thing to understand is that : If you are not seeing the information being transmitting in the network between your browser / application to your server, they will not be seen in your analytics tool.

API

API is the future, so if you want to work within the online industry, I would definitely recommend you to spend some time learning what are their capabilities. We will do that here as well but this is just an overview and if you need to work with some, it is quite an important skill to develop.

API are application programming interface and it actually means everything that is accessible through a set of methods (also calls functions in other context). There are famous API that you may have heard of : the DOM is the Document Object Model API.

You can request different information from the page based on the DOM.

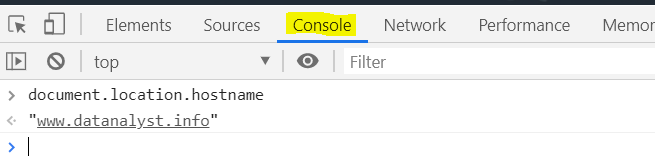

As an example, if you open your developer console in your browser (Ctrl + Shift + I) and type document.location.hostname, you will access the DOM API and retrieve the domain of your domain.

You can try it now :

But more often, APIs enable you to connect to applications and automate some usage you have with these applications.

On this website, I explained some API wrapper I have done on python. They are used in order to access and request data from Launch or Analytics per example.

Would it be possible to access that information through the UI (User Interface) ? Yes.

However, it usually takes longer time to retrieve the information that way (through the UI), even if it is easier.

Setting up an API is time consuming but once this is done, it is much more scalable than using the UI. As explained in different articles, more and more solutions are built API first. This means that they are just API with an interface on top of it.

The important benefit of it is that you scale your business easily as the features available for the clients are just a display of additional API methods.

All the API (should) comes with a manual that explains you how to use it. Your question could be “How can I start to use these API”, the simple answer is “read the manual”. In reality it also require lots of perseverance to succeed as it is not that easy. But if it was easy, being a developer would have been too easy as well.

If you want to know a bit more on the APIs, there are 3 main types of API that you will get to hear about:

- REST API : This is the standard and what you should work most often. You can read the Wiki about that architecture. To do short and simplify a lot, it uses an endpoint (destination when you do a request) per method it supports and it uses JSON format (mostly). We’ll see that JSON is a term that you should understand.

Endpoint means that the request you do to Analytics reports and segments are not directed to the same service. - SOAP API : This is legacy API, the main difference vs the REST API is that it uses the XML schema as default. There are other main differences but I hope you will never work with it. When you hear it, it means that the application is pretty old.

- GraphQL : It is the latest API standard and it may be the future. The may difference vs the REST API is that it is using only one endpoint and you define the service you want to use in the request. Which means that you can use multiple services in one request (you see the gain here?).

JS / HTML

If you want to work in online data analysis, you HAVE TO know a bit of HTML and the best is that you catch up on some JavaScript logic. Understanding the back-end and front-end logic.

I think there is way around that, but in that regards, here are some elements that you should know by name and what they stand for:

- DOM

- JSON

- jQuery

- Node / Nodejs

- Header

- Body

- Footer

You don’t have to know how to use those elements but there is a minimum to understand when working with Analytics that you should know so you can follow where the problem lies or how things are linked together.

HTTP Response Code

One of the easy thing to learn on the technical side is the HTTP response code that are being fired from the page. That can help a lot to understand what is going on on the website you are visiting and understand the error that have happened.

There is a full list on Wikipedia about that and as always it is quite exhaustive : https://en.wikipedia.org/wiki/List_of_HTTP_status_codes

However, I would pick the cluster of these response code and give you a quick definition so you have learned something from this blog post.

- 1XX : 1XX stand for all of the 100, 101, etc.. all the reponse codes that are between 100 and 199. In that case, you shouldn’t encounter those ones very often so we can skip them.

- 2XX : This usually means that the server has answered the request and has deliver a response. The most famous one is 200, everything is clear. One that can be useful is the 204 when you don’t have anything but don’t want to throw an error.

- 3XX : This is the server answering that he has done something about your request but he has worked on it. The most famous one is 301 : Moved Permanently, you are telling the browser, the resource that you have requested has been moved permanently and it is now here. 302 is the same redirection but the resource may come back. On SEO you may want to tell also when nothing new happened on the page, in that case you can use the 504.

- 4XX : This is the server telling that there was an error happening on your side, client side (you are the client of the server). The most famous one is the 404 telling that you have requested a resource that cannot be found.

- 5XX : In this scenario, the server tells you that there have been error happening on his side. I am sorry, I messed up. The most famous one is the 503 where something is blocking the server.

Analyst things

Users

What is a user is deep and philosophical question when you are working on an online environment. For some (Advertisers) it is a cookie, so the cookie represent a unique user and more cookies mean more users. For other (Mobile) it is a Device ID that is bound to the device that you are wearing. For the main website, it can be a customer ID, so in their SQL database they have a unique key attached to name, address and so on…

The problem with the first method is that 3rd party cookies are very hardly fought against now so it is not representing a complete state anymore, also each browsers has its own cookie so same user, in the same laptop but 2 different browsers means 2 different user.

The problem with the 2nd method is that not every application is consumed with a mobile and this concept of device id cannot be back-ported to Desktop or other application easily. Also if 2 different users are using the same device, they are only one person.

The customer ID is what is the most faithful to the common understanding of what a user is, however in order to retrieve a customer ID and it to be assigned with a name (and address), the user needs to realize certain steps… What are you doing for the users that didn’t meet these criteria ? The unknown users ? The Non-buyers ?

There is nothing to really know on this part but having these questions in mind when people are taking about users or persons, or customers is important. Depending the background they have and what tool they are working with, it will mean different things.

SQL

If you are digital analyst, you will need to know SQL at some point on your career, at least some basic concept are required so you understand how data are being structure internally. If you are a bit into that topic, you may have heard of NoSQL and that SQL is not really needed anymore, however, this is not true.

First because NoSQL means not only SQL and not NO SQL.

Second because SQL is such an efficient mechanism to store information that it will take long time before it dies.

For a long time, the main advantage of NoSQL vs SQL was the possible to spread you data into different servers and make request faster. SQL was a monolithic bloc where NoSQL was faster and more agile, also the Schema definition (important term that you should look up, here per example) was more flexible in NoSQL because of its JSON structure (we come back to that term, you should really look that up). This is less and less true, there are distributed databases now (so into multiple servers) and some database structure are now allowing JSON structure in their schema (PostgreSQL).

Knowing how to realize some basic SELECT statement is important skill, there will always be people better at optimizing the request but there are some basic that can make your life a lot easier/faster. With the Adobe Experience Platform coming in the future, the Query Service Feature will enable you to query the dataset (Analytics / Target) directly. Therefore this skill will be quite useful directly there.

At the end of your basic learning, you should get to know how to do a SELECT with WHERE and a JOIN. That will get you started on retrieving the data you want and you can re-work it afterward on you.

Excel

This tool is a must, there is not much to tell about it but if you are not familiar with Excel, you should start looking at tutorial on how you can do easy analysis with it. In a perfect world, everything would be done in your Analytics tool but as nothing is perfect, you will probably, or your boss would want the raw number in order to rework them in Excel. In that case, you should be aware of the possibility there.

To be honest, I am not feeling super familiar with Excel myself and I should invest more time on this. There are great feature such a PowerQuery or the DAX language that you can pick up and help you build very powerful analysis and even visualization with PowerBI.

If you don’t know a lot about the pivot table, this is where you start because this is easy and really boost your productivity with that tool.

Data Cleaning (Python, Regex)

You will not always have clean data at your disposal in order to realize your analysis, understanding the context is important in order to realize a good … data cleaning. Data cleaning can be realized through several means, Excel that I just mentioned above can be one of the tool, especially power by DAX or PowerQuery.

What you can also do is using a basic notepad or a programming language such as Python.

In any case, knowing how to clean your data is very important, and more efficient you can be, faster you can bring insights.

As I am a python users, I will recommend this language but you can chose the method you want to realize.

Sometimes, I am actually doing some notepad cleaning before even using python for more advanced tasks.

I also don’t do python if this is a small dataset and I just want sorting or very basic information. Excel will do the job.

One feature that I am using almost constantly are regular expression, a.k.a. Regex. This knowledge is more than important, it is vital to be efficient as I was explaining before.

I would highly recommend that you spend some time working on this, and this is quite easy actually because I am seeing everyday use cases on how to use that.

You can use regular expression in notepad++ or Sublime Text, and start from there.

One cool resource on learning it is regexone. Don’t be afraid to google stuff there.

Other things

The other things that you need to know are well explained in that post, the 7 traits of a digital analysit. However, it feels that this article wouldn’t be complete without this recap at the end. The 7 trait blog post wasn’t even done by myself but by a very good friend of mine (and a good data analyst). I tried to extract 4 things from that list in order to summarize it on my core fundamental.

Curious

This is the best advice you can have on your career and I think it applies to any job. It is even more true when you are working on a field that is always changing. The web is evolving so fast and it is very hard to stay put with your knowledge on this.

I know very few people that are not curious in this field. Only using past knowledge can’t push you very far.

Open

In this field, one of my biggest strength is that I am very open to new hypothesis, it is on par with the next trait I am going to discuss.

I have seen so many good analysts being blinded and not understanding what the data are telling them. Sometimes, you need to realize that the users are just doing random stuffs or that something that shouldn’t happened just happened.

To paraphrase Murphy Law : Anything that could happen will happen.

Critical

I think this is an important skill for the Digital Analyst, it is to be critical of your data, be critical of your analysis. Embrace the criticism on your work as way to improve, and respect anyone view on the data you have output or the implementation you have done.

There is rarely a unique answer on your analysis and there are always different way to approach a problem.

The technique that you have used may have some advantages but also some drawbacks. Defining the real goal will help you to reduce the amount of critic you want to achieve and whatever comes from the feedback is a way for you to learn.

Sedulous

How many times did I want to give up ? We could count that by dozens. This field is hard as you are always challenges by (smarter) people.

If you start on this field, there would be more than one moment when you feel so dumb because you don’t know something obvious to everyone else in the room (like the Student test shouldn’t be used on not normally distributed data… obviously 😉 )

On these days, you need to remember that everyone has started somewhere, they may not know what you know (did you know that Cleopatra is closer (time-wise) to us than to the builder of the Pyramids?), but more importantly the way is the reward.

If you actually have most of the characteristics already discussed here (curious, open, critical), you will have a blast following this path. You just need to not give up at the first (big) obstacle. Trust the process: everytime you have hard time, everytime you are out of your comfort zone, it means that you are learning.

Long read, but a really good one! Was happy to see that most of the stuff made sense here, and based on that I can pass myself as a half-decent analyst 😁